PandasAI: Merging generative artificial intelligence capabilities into Pandas

Rise of Prompt Engineering and Its Tools

Prompt engineering is the process of designing and optimizing the prompts used by language models to generate text. It has become increasingly important in recent years as language models like GPT-3 have become more powerful and widely used.

The rise of prompt engineering can be attributed to several factors. First, as language models have grown larger and more complex, it has become more difficult to fine-tune them for specific tasks. Prompt engineering provides a way to design prompts that help the model generate high-quality text for specific tasks.

Second, prompt engineering has become more important as language models have been used in a wider variety of applications. For example, in the field of natural language processing, prompt engineering has been used to improve language models' performance in tasks such as text classification, sentiment analysis, and question-answering.

Third, prompt engineering has become more popular as a way to improve the interpretability and controllability of language models. By designing prompts that elicit specific types of responses, researchers can gain a better understanding of how language models work and how to optimize them for specific applications.

Overall, prompt engineering has emerged as a key tool in the development and deployment of language models. As language models continue to grow in complexity and usage, prompt engineering is likely to become even more important in the years ahead.

One such tool, which I'm going to discuss today, is PandasAI.

PandasAI is a Python library that adds generative artificial intelligence capabilities to Pandas, the popular data analysis and manipulation tool. It is designed to be used in conjunction with Pandas and is not a replacement for it. It makes Pandas conversational, allowing you to ask questions about your data in plain language and get answers back, often in the form of Pandas objects such as DataFrames.

For example, you can ask PandasAI to find all the rows in a DataFrame where the value of a column is greater than 5, and it will return a DataFrame containing only those rows. The example below asks a similar plain-language question, namely which five countries are the happiest:

import pandas as pd

from pandasai import PandasAI

# Sample DataFrame

df = pd.DataFrame({

"country": ["United States", "United Kingdom", "France", "Germany", "Italy", "Spain", "Canada", "Australia", "Japan", "China"],

"gdp": [19294482071552, 2891615567872, 2411255037952, 3435817336832, 1745433788416, 1181205135360, 1607402389504, 1490967855104, 4380756541440, 14631844184064],

"happiness_index": [6.94, 7.16, 6.66, 7.07, 6.38, 6.4, 7.23, 7.22, 5.87, 5.12]

})

# Instantiate an LLM (with no arguments, the API key is read from the environment)

from pandasai.llm.openai import OpenAI

llm = OpenAI()

pandas_ai = PandasAI(llm)

pandas_ai.run(df, prompt='Which are the 5 happiest countries?')

As we can see, instead of writing explicit Pandas code, we simply pass a prompt describing the result we want.

Output:

6 Canada

7 Australia

1 United Kingdom

3 Germany

0 United States

Name: country, dtype: object
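For comparison, here is a minimal sketch of the plain Pandas code that answers the same question. It is written by hand for illustration and is not necessarily the code PandasAI generates; it reuses the sample data from above.

```python
import pandas as pd

df = pd.DataFrame({
    "country": ["United States", "United Kingdom", "France", "Germany", "Italy",
                "Spain", "Canada", "Australia", "Japan", "China"],
    "gdp": [19294482071552, 2891615567872, 2411255037952, 3435817336832,
            1745433788416, 1181205135360, 1607402389504, 1490967855104,
            4380756541440, 14631844184064],
    "happiness_index": [6.94, 7.16, 6.66, 7.07, 6.38, 6.4, 7.23, 7.22, 5.87, 5.12],
})

# Take the five rows with the highest happiness_index, then keep the country column
happiest = df.nlargest(5, "happiness_index")["country"]
print(happiest)
```

The prompt replaces the need to know that `nlargest` exists and which column to rank by.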

Of course, you can also ask PandasAI to perform more complex queries. For example, you can ask PandasAI to find the sum of the GDPs of the 2 unhappiest countries:

pandas_ai.run(df, prompt='What is the sum of the GDPs of the 2 unhappiest countries?')

Output:

19012600725504
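The figure above can be checked by hand. The sketch below is an illustrative Pandas equivalent under the same sample data, not PandasAI's generated code: it selects the two countries with the lowest happiness index (China and Japan) and adds up their GDPs.

```python
import pandas as pd

df = pd.DataFrame({
    "country": ["United States", "United Kingdom", "France", "Germany", "Italy",
                "Spain", "Canada", "Australia", "Japan", "China"],
    "gdp": [19294482071552, 2891615567872, 2411255037952, 3435817336832,
            1745433788416, 1181205135360, 1607402389504, 1490967855104,
            4380756541440, 14631844184064],
    "happiness_index": [6.94, 7.16, 6.66, 7.07, 6.38, 6.4, 7.23, 7.22, 5.87, 5.12],
})

# Pick the two rows with the lowest happiness_index, then add up their GDPs
unhappiest = df.nsmallest(2, "happiness_index")
total_gdp = unhappiest["gdp"].sum()
print(total_gdp)  # 19012600725504
```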

You can also use PandasAI for data visualisation:

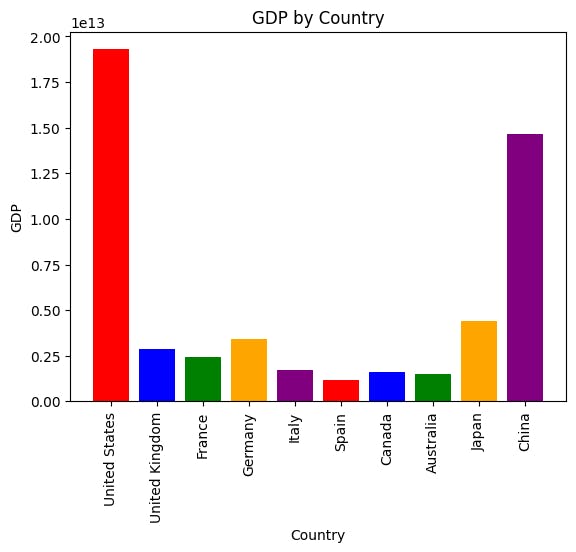

pandas_ai.run(

df,

"Plot the histogram of countries showing for each the gdp, using different colors for each bar",

)

Output:

A plot of GDP per country, produced without writing any matplotlib or seaborn code by hand. The result comes from the prompt alone.
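To give a sense of what the prompt saves you from writing, here is a hand-written matplotlib sketch of a comparable chart. It is an assumption about what such a plot might look like (one coloured bar per country), not the code PandasAI actually generates; the colormap, figure size, and labels are illustrative choices.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend so the sketch runs without a display
import matplotlib.pyplot as plt

countries = ["United States", "United Kingdom", "France", "Germany", "Italy",
             "Spain", "Canada", "Australia", "Japan", "China"]
gdp = [19294482071552, 2891615567872, 2411255037952, 3435817336832,
       1745433788416, 1181205135360, 1607402389504, 1490967855104,
       4380756541440, 14631844184064]

fig, ax = plt.subplots(figsize=(10, 5))

# One distinct colour per bar, taken from the ten-colour "tab10" palette
colors = plt.cm.tab10(range(len(countries)))
bars = ax.bar(countries, gdp, color=colors)

ax.set_ylabel("GDP")
ax.set_title("GDP by country")
ax.tick_params(axis="x", labelrotation=45)
fig.tight_layout()
```

Calling `fig.savefig(...)` or, with an interactive backend, `plt.show()` would then render the figure.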

Installation

pip install pandasai

Privacy & Security

To generate the Python code to run, PandasAI takes the DataFrame head, randomizes it (using random generation for sensitive data and shuffling for non-sensitive data), and sends only that randomized head to the LLM.

If you want to enforce privacy further, you can instantiate PandasAI with enforce_privacy=True, which sends only the column names to the LLM and not the head.
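The idea can be illustrated with plain Pandas. The sketch below is a rough approximation for intuition only and is not PandasAI's actual implementation: it shuffles each column of the head independently so that values no longer line up row by row, and it shows the column-names-only view that corresponds to the stricter privacy setting. Random generation for sensitive data is not shown.

```python
import pandas as pd

df = pd.DataFrame({
    "country": ["United States", "United Kingdom", "France", "Germany", "Italy",
                "Spain", "Canada", "Australia", "Japan", "China"],
    "gdp": [19294482071552, 2891615567872, 2411255037952, 3435817336832,
            1745433788416, 1181205135360, 1607402389504, 1490967855104,
            4380756541440, 14631844184064],
    "happiness_index": [6.94, 7.16, 6.66, 7.07, 6.38, 6.4, 7.23, 7.22, 5.87, 5.12],
})

head = df.head()  # only the first five rows are considered at all

# Illustrative shuffling: permute every column on its own so that the
# original pairing of values within a row is not preserved
shuffled_head = pd.DataFrame({
    col: head[col].sample(frac=1, random_state=0).to_numpy()
    for col in head.columns
})

# Stricter variant: share nothing but the column names
columns_only = list(df.columns)

print(shuffled_head)
print(columns_only)
```

Either way, the LLM sees enough structure to write code against the DataFrame while the full dataset stays local.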

Environment Variables

To set the API key for the LLM (Hugging Face Hub or OpenAI), you need to set the appropriate environment variables. You can do this by copying the .env.example file from the PandasAI repository to .env:

cp .env.example .env

Then, edit the .env file and set the appropriate values.
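If you want your own script to fail early with a clear message when the key is missing, a small check like the following can help. This is a minimal sketch using only the standard library; the helper `get_api_key` is hypothetical, and the variable name `OPENAI_API_KEY` is an assumption that should be checked against the names listed in .env.example.

```python
import os


def get_api_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Return the API key stored in the given environment variable.

    Hypothetical helper; the default variable name is an assumption.
    """
    key = os.environ.get(var_name)
    if not key:
        raise RuntimeError(f"Environment variable {var_name} is not set")
    return key
```

Calling `get_api_key()` before instantiating the LLM surfaces a missing key immediately instead of at the first request.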

Alternatively, you can pass the API key directly to the constructor of the LLM:

from pandasai.llm.openai import OpenAI

from pandasai.llm.starcoder import Starcoder

# OpenAI

llm = OpenAI(api_token="YOUR_OPENAI_API_KEY")

# Starcoder (hosted on the Hugging Face Hub)

llm = Starcoder(api_token="YOUR_HF_API_KEY")

Check out the PandasAI GitHub repository to get started.

Gabriele Venturi built this awesome stuff for you to use!